Projects

The projects in the Language and Computation in Neural Systems group can be divided into three core themes: formal theory, computational modelling, and experimental work. These themes are closely connected, and most projects draw on more than one of them. Please see below for more details.

- Formal Theory

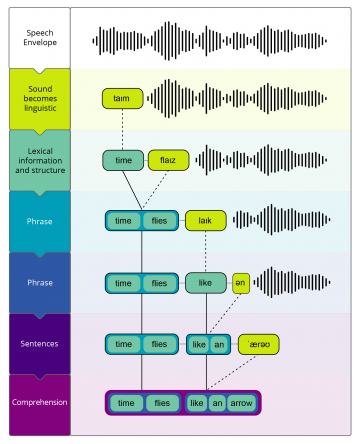

Language is built on hierarchical structures, starting from sensory information and moving up towards the comprehension of meaning. We model how lexical and phrase-level structures are extracted from ongoing speech, with a focus on formalization and on the hybridization of statistical and symbolic models. On the computational level, we aim to derive theories that can produce representations that meet the formal requirements of natural language. On the algorithmic level, we use biologically inspired mechanisms that are validated against neuroscientific findings. Ultimately, we aim to derive a formal model of how brain mechanisms can implement hierarchical linguistic structure via perceptual inference, that is, of how linguistic structures are generated in a neurophysiological system. Relevant papers: Martin, 2016, 2020; Martin & Baggio, 2019.

We assume that two properties of neural systems are key to the computational implementation of language in the brain: oscillations and inhibition. Oscillations are key for segmenting and parsing incoming sensory input, and also for combining and separating representations that are critical for linguistic structure. Inhibition serves to select and control representations and their dimensionality during structure generation. Relevant papers: Martin, 2020; Martin & Doumas, 2017, 2019a, 2019b; Meyer, Sun, & Martin, 2019.

- Computational Modelling

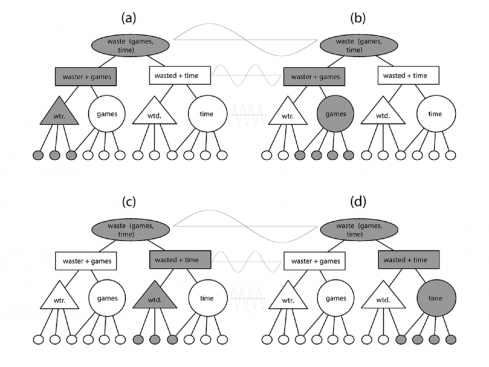

In our computational modelling work, we try to specify our formal models in computational terms. We are convinced that being explicit about our models and theories leads to better science (see Guest & Martin, 2020). Much of our work is specification-focused, but we also focus on combining contemporary language models with principles of symbolic computation in order to achieve the formal properties that language requires (see Martin & Baggio, 2019; Martin & Doumas, 2017, 2019a, 2019b).

We also collaborate closely with Dr. Leonidas A. A. Doumas at the University of Edinburgh, who developed the symbolic-connectionist model Discovery of Relations by Analogy (DORA; Doumas, Hummel, & Sandhofer, 2008). In DORA, rhythmic computation is used to separate and combine predicates and arguments. The model does this by activating representations at different moments of a rhythmic computational cycle, which results in independence between a variable and its value, a predicate and its argument, or a word and a phrase. We have shown that this model is capable of explaining how the brain forms structured representations of language (Martin & Doumas, 2017).

This model, and others in development in the group, guide us in making informed predictions that we can test in experiments that measure brain responses, but they largely function to help us build explanatory and mechanistic theories of language.
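The timing idea behind rhythmic binding, as described for DORA above, can be illustrated with a toy sketch. This is not DORA's implementation: the function names `firing_schedule` and `recover_bindings`, the discrete time slots, and the example proposition are all assumptions made for illustration. The sketch only shows how firing order alone can carry which predicate goes with which argument, so that the same set of units can encode different structures.

```python
# Toy illustration of time-based binding. NOT the DORA implementation:
# all names, the slot scheme, and the example data are hypothetical.
from typing import List, Tuple

Binding = Tuple[str, str]      # (predicate, argument)
Schedule = List[Tuple[int, str]]  # (time slot within the cycle, active unit)


def firing_schedule(bindings: List[Binding]) -> Schedule:
    """Assign each unit its own time slot: a predicate fires, then its argument."""
    schedule: Schedule = []
    for i, (predicate, argument) in enumerate(bindings):
        schedule.append((2 * i, predicate))      # even slot: predicate
        schedule.append((2 * i + 1, argument))   # next slot: its argument
    return schedule


def recover_bindings(schedule: Schedule) -> List[Binding]:
    """Read bindings back from timing alone: each even slot pairs with the slot after it."""
    by_slot = dict(schedule)
    return [(by_slot[t], by_slot[t + 1]) for t in sorted(by_slot) if t % 2 == 0]


def active_units(schedule: Schedule) -> set:
    """The set of units that fire at some point in the cycle, ignoring timing."""
    return {unit for _, unit in schedule}


# Hypothetical example: two propositions built from the same four units.
mary_loves_john = [("lover", "Mary"), ("beloved", "John")]
john_loves_mary = [("lover", "John"), ("beloved", "Mary")]

s1 = firing_schedule(mary_loves_john)
s2 = firing_schedule(john_loves_mary)

print(active_units(s1) == active_units(s2))          # same units are active
print(recover_bindings(s1) == recover_bindings(s2))  # but the timing differs
```

Because each predicate and argument is stored as a separate unit and only their relative timing links them, neither representation has to change when the pairing changes. That is the kind of independence between predicate and argument that the paragraph above refers to, shown here in a deliberately simplified form.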

- Experimental Work

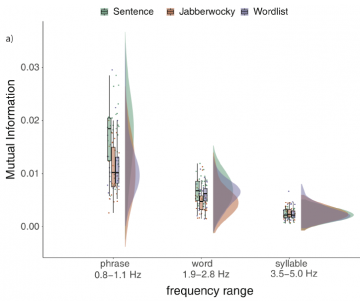

We aim to test the predictions that arise from our formal theoretical and computational models. For example, one prediction that follows from using rhythmic computation for linguistic structure building is that responses at lower frequencies should be stronger when more abstract linguistic structures are present. Kaufeld et al. (2020) tested this prediction and found that low-frequency tracking is stronger for sentences than for either a prosodically matched jabberwocky control or a word-list lexical control. Relevant pre-prints and papers: Brennan & Martin, 2019; Kaufeld et al., 2020.

We continue to test the predictions that flow from our models, and the results will in turn guide our future modelling work.
