Questions and Answers

Is there something you have always wanted to know about language? We might have an answer! On this page we answer questions about various aspects of language asked by people outside of the language researcher community.

- Why do bilingual stroke patients sometimes recover only one language?

When a person suffers a stroke, the blood flow to certain brain areas is suddenly disrupted, either because a blood vessel starts bleeding (haemorrhage) or because a blood vessel is blocked. If the stroke affects brain areas which are important for language, then language functions can be partially or fully lost. This condition is called aphasia, and it is sometimes reversible, at least to some degree, with time, treatment and rehabilitation. Bilinguals, that is, people who speak more than one language, are known to recover from aphasia in a number of different ways. The most common case is parallel aphasia, in which the bilingual patient recovers both languages equally well. In some cases, however, the recovery disproportionately favours one of the patient's languages; this type of recovery is called selective aphasia.

The case of selective aphasia initially led researchers to believe that each language of a bilingual person must be located in a different brain area, given that one language recovers better than the other after the stroke. However, with the help of brain imaging scans we now know that this idea is not correct. On the contrary, when a person speaks many languages, they all activate a common network of brain areas. While the whole picture of how the brain generates multiple languages is still partly unclear, we do know a number of factors that seem to influence to what extent the languages of a bilingual patient will recover after a stroke. If a person is less proficient in one of the two languages, this language may not recover as well as the more proficient language. This means that the more automatic a skill is, the easier it is to recover, whereas something that takes effort, such as a language that one speaks only rarely, is harder to recover. Social factors and emotional involvement are also important if we want to understand which language will recover after a stroke, for instance how often a specific language is used, or what emotions are associated with a specific language. However, it is still unclear exactly how these factors interact in predicting the success of recovery.

One of the current theories on why bilingual aphasics may recover one language disproportionately better than the other suggests that this happens when the stroke damages specific control mechanisms in the brain. When a bilingual person knows two languages, he/she needs to suppress or ‘switch off’ one of the languages while using the other language. If the mechanisms that control this switch are damaged during the stroke, the aphasic patient may no longer be able to recover both languages to a similar degree, because the ability to control language use has been lost. In this case the person may appear to have completely lost one of the languages, but the problem is actually one of control. Recently researchers found that the control mechanisms are more impaired in bilinguals with selective aphasia, who recover only one language, than in bilinguals with parallel aphasia, who recover both languages. Interestingly, when languages recover after stroke, the connections between language and control areas in the brain are re-established. While this finding supports the theory linking selective aphasia to impaired control mechanisms, it is only one of several theories, and researchers are currently trying to better understand what other causes may underlie the surprising recovery patterns seen in bilingual aphasia.

Written by Diana Dimitrova and Annika Hulten

Further reading:

Fabbro, F. (2001). The bilingual brain: Bilingual aphasia. Brain and Language, 79(2), 201-210.

Green, D. W., & Abutalebi, J. (2008). Understanding the link between bilingual aphasia and language control. Journal of Neurolinguistics, 21(6), 558-576.

Verreyt, N. (2013). The underlying mechanism of selective and differential recovery in bilingual aphasia. Department of Experimental Psychology, Ghent, Belgium.

- At what age should children start using simple sentences?

Children differ in their learning strategies and developmental patterns. Therefore age “benchmarks” (for example, "first words appear around 12 months") only represent a very general average. It is completely normal for children to develop a skill either a few months earlier or a few months later than the “benchmark” age. With that in mind, we can summarize the general pattern for how children learn to use simple sentences.

Simple sentences start appearing in children's speech when they are 30 to 36 months old. To some, this might seem quite late, considering that children's first words appear around 12 months of age. But before they can string words into sentences, children need to acquire some basic knowledge about their language's grammar. For example, we think of nouns and verbs as the building blocks of sentences ("cat"+"want"+"milk" = "The cat wants some milk"), but even very simple sentences often require the speaker to add other words ("the"/"some") and inflections ("want"->"wants"). Learning function words and inflections is not trivial because, unlike content words like "cat" and "milk", children cannot experience the meaning of "the", "some", and "-s". To make things even more complicated, the words need to be put in the right order and said aloud with a melody and emphasis that matches the intended meaning (compare "The CAT wants some milk" vs. "The cat wants some MILK"). Managing all of this, even for simple sentences, can be difficult for young children.

But even though children under 30 months do not yet produce full sentences, they do learn how to combine words in other ways. For example, soon after their first birthdays, many children begin to combine words with gestures such as pointing, showing, and nodding. The combination of a word and a gesture (for example, the word "milk" + a nod) says more than just the word or the gesture alone. In fact, some studies suggest that these early word-gesture combinations are linked to children’s development of two-word combinations just a few months later.

From around 18 months, children begin to use two-word sequences like "Bear. Trolley." to express sentence-like meanings (in this case: "The bear is in the trolley"). At first, the words in these sequences sound unconnected, like individual mini-sentences of their own. But as children get more practice, the pauses between words get shorter and the melody of each word seems more connected to the next one.

At 24 months, when children are at the heart of this "two-word stage", they often produce sequences of 2–3 words that sound like normal sentences, only with most of the function words and inflections left out ("There kitty!"). At this stage, some children even consistently place the words in a specific order. For example, "pivot" words ("more", "no", "again", "it", "here") are used only in the first position by some children ("more apple", "here apple") and only in the second position by others ("apple more", "apple here"). As children begin to use shorter pauses, more consistency in word order, and speech melodies that join the individual words together, they show evidence that their word sequences were planned as a single act: a sentence.

Even when children finally begin producing simple sentences around 30–36 months, they still have a lot to learn. Their ideas about how to combine words into longer sequences are not yet adult-like, and rely quite a bit on what they hear most often around them. Marking words with the correct inflections can be complicated, and it is not uncommon for children to make errors as they learn which inflections are regular (walk, walks, walked...) and which are irregular (am, is, was...). In the 2 years following the onset of simple sentences, children continue to make huge advances in their vocabulary size and use of grammatical marking (among other things). Their sentences become more elaborate with age and, by the time they are 4–5 years old, they have learned a lot of what they need to know to communicate fluently with others.

If you would like to learn more about language development please have a look at the website of Nijmegen's Baby & Child Research Center.

By Marisa Casillas & Elma Hilbrink

Further reading:

Clark, E. V. (2009). “Part II: Constructions and meanings”. In First language acquisition (pp. 149–278). Cambridge University Press.

Iverson, J. M., & Goldin-Meadow, S. (2005). Gesture paves the way for language development. Psychological science, 16(5), 367-371.

- What's the link between language and programming in the brain?

Siegmund et al. (2014) were the first to empirically investigate the link between programming and other cognitive domains, such as language processing, at least using modern neuroimaging methods. They used functional magnetic resonance imaging (fMRI), which measures changes in local blood oxygenation that result from brain activity in different networks across the brain. Undergraduate students of computer science were scanned while reading code snippets for comprehension and while reading similar code to look for syntax errors, without comprehension. The results showed activation in the classical language networks, including Broca's, Wernicke's and Geschwind's territories, predominantly in the left hemisphere.

The code was designed to encourage so-called bottom-up comprehension, which means reading and understanding expression by expression and line by line, rather than browsing the overall structure of the code. This process can be compared to language processes such as combining words according to syntax to arrive at a coherent sentence meaning (as well as connecting meaning across sentences in discourse). It is possible, and has been suggested in the computer science literature on the skill set needed to be a good programmer (Dijkstra, 1982), that people who master their native language well are also more efficient software developers. A mechanistic explanation could be the strength of the connections between the brain regions mentioned above, which varies from person to person. In summary, similar brain networks are found for programming and language comprehension.

Answer by: Julia Udden, Harald Hammarström and Rick Jansen

Further reading:

Siegmund, J., Kästner, C., Apel, S., Parnin, C., Bethmann, A., Leich, T., Saake, G. & Brechmann, A. (2014) Understanding Source Code with Functional Magnetic Resonance Imaging. In Proceedings of the ACM/IEEE International Conference on Software Engineering (ICSE).

Dijkstra, E. W. (1982). How do we tell truths that might hurt? In Selected Writings on Computing: A Personal Perspective (pp. 129–131). Springer.

- What is the similarity between learning a natural language and learning a programming language?

Programming languages are usually taught to teenagers or adults, much like when learning a second language. This kind of learning is called explicit learning. In contrast, everyone’s first language was learned implicitly during childhood. Children do not receive explicit instructions on how to use language, but learn by observation and practice. Part of what allows children to do this is the interactive nature of language: people ask questions and answer them, tell others when they don’t understand and negotiate until they do understand (Levinson, 2014). Programming languages, on the other hand, are passive: they carry out instructions and give error messages, but they don’t find the code interesting or boring and don’t ask the programmer questions.

Because of this, it is sometimes difficult to think in a programming language, i.e., to formulate instructions comprehensively and unambiguously. The good news is that many programming languages use similar concepts and structures, since they all are based on the principles of computation. This means that it is often quite easy to learn a second programming language after learning the first. Learning a second natural language can take much more effort. One thing is clear - it is becoming increasingly important to learn both kinds of language.

Answer by: Julia Udden, Harald Hammarström and Rick Jansen

Further reading:

The children who learned to use computers without teachers

Levinson, S. C. (2014). Pragmatics as the origin of recursion. In F. Lowenthal, & L. Lefebvre (Eds.), Language and recursion (pp. 3-13). Berlin: Springer.

- What is the similarity between natural languages and programming languages?

Some programming languages certainly look a lot like natural languages. For example, here’s some Python code that searches through a list of names and prints one out if it’s also in a list called invited_people.

Python

    # Print every name in my_list that also appears in invited_people.
    for name in my_list:
        if name in invited_people:
            print(name)

However, some other programming languages are much less readable. Here is some code in Scheme that does the same as the code above.

Scheme

    ; Visit each name and display it only if it is on the invited list.
    (for-each
      (lambda (name)
        (if (member name invited_people)
            (begin (display name) (newline))))
      my_list)

So, how similar or different are natural languages and programming languages really? To answer these questions, we need to understand some central terms that linguists use to describe the structure of languages, rather than just looking at the surface of what looks similar or not.

Similar structures of semantics and syntax

Two of the most central concepts in linguistics are the concepts of semantics and syntax. In short, semantics is the linguistic term for meaning, but a more precise explanation is that semantics contains the information connected to concepts. For instance, a word form like “sleep” (spelled S-L-E-E-P, in either letters or pronounced as sounds) designates a certain action of a living organism and that’s the semantics of that word. The syntax on the other hand is the structure of how words of different kinds (e.g. nouns and verbs) can be combined and inflected. The sentence “My ideas sleep” is a well-formed English sentence from the point of view of syntax, but the semantics of this sentence is not well-formed since ideas are not alive and thus cannot sleep. So, semantics and syntax have rules, but semantics relates to meaning and syntax relates to how words can be combined.

Having explained the semantics and syntax of natural languages, let’s turn back to programming. In programming languages, the coder has an intention of what the code should do. That could be called the semantics or the meaning of the code. The syntax of the programming language links a certain snippet of code, including its “words” (that is, variables, functions, indices, different kinds of parentheses etc.) to the intended meaning. The Python and Scheme examples above have the same semantics, while the syntax of the two programming languages differs.
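The same point can be made within a single programming language. The following is a minimal sketch, not taken from the original answer: the example names and the two helper functions are hypothetical, and only the variable names my_list and invited_people are borrowed from the snippet earlier in the text. Both functions are written with different syntax but are intended to have the same semantics.

```python
# Hypothetical example data; the variable names mirror the earlier snippet.
my_list = ["Ana", "Ben", "Chloe", "Dev"]
invited_people = ["Ben", "Dev", "Eli"]


def invited_with_loop(names, invited):
    """Collect the invited names using an explicit loop."""
    result = []
    for name in names:
        if name in invited:
            result.append(name)
    return result


def invited_with_comprehension(names, invited):
    """Collect the same names using a list comprehension."""
    return [name for name in names if name in invited]


loop_result = invited_with_loop(my_list, invited_people)
comprehension_result = invited_with_comprehension(my_list, invited_people)

# Different syntax, same meaning: both calls yield the same list.
print(loop_result == comprehension_result)
```

The two functions differ only in form, which is roughly what it means for two snippets to share semantics while differing in syntax.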

Different purposes

We have described many parallels between the basic structure of natural languages and programming languages, but how far does the analogy go? After all, natural languages are shaped by communicative needs and the functional constraints of human brains. Programming languages on the other hand are designed to have the capacities of a Turing machine, i.e. to do every computation that humans can do with pen and paper, again and again.

It is necessary for programming languages to be fixed and closed, while natural languages are open-ended and allow blends. Code allows long lists of input data to be read in, stored and rapidly parsed by shuffling around data in many steps, to finally arrive at some output data. The point is that this is done in a rigorous way. Natural languages on the other hand must allow their speakers to greet each other, make promises, give vague answers and tell lies. New meanings and syntax constantly appear in natural languages, and word meanings, for example, change gradually over time. A sentence from a spoken language can have several possible meanings. For example, the sentence “I saw the dog with the telescope” has two possible meanings (seeing a dog through a telescope or seeing a dog that owns a telescope). People use context and their knowledge of the world to tell the difference between these meanings. Natural languages thus depend on an ever-changing culture, creating nuances and blends of meanings, for different people in different cultures and contexts. Programming languages don’t exhibit this kind of flexibility in interpretation. In programming languages, a line of code has a single meaning, so that the output can be reproduced with high fidelity.
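As a rough illustration of this contrast, the sketch below writes out the two readings of the telescope sentence by hand as nested tuples (these bracketings are an assumption made for illustration, not the output of any parser) and then uses Python's standard ast module to show that an arithmetic expression is always given exactly one structure.

```python
import ast

# Natural language: one string of words, two possible structures.
# These nested tuples are hand-written for illustration only.
sentence = "I saw the dog with the telescope"
reading_instrument = ("I", ("saw", ("the", "dog")), ("with", ("the", "telescope")))
reading_owner = ("I", ("saw", (("the", "dog"), ("with", ("the", "telescope")))))

# Programming language: the grammar fixes a single structure.
# "2 + 3 * 4" is always parsed as 2 + (3 * 4), never as (2 + 3) * 4.
expression = "2 + 3 * 4"
tree = ast.parse(expression, mode="eval")
top_operator = type(tree.body.op).__name__  # the outermost operation in the tree
value = eval(compile(tree, "<expression>", "eval"))

print(top_operator, value)
```

A human listener has to choose between the two tuple structures using context, whereas the Python parser never has a choice to make.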

Answer by: Julia Udden, Harald Hammarström and Rick Jansen

- How do gender articles affect cognition?

Languages organize their nouns into classes in different ways. Some don’t have any noun classes (e.g., English: every noun is just ‘it’), some have two (e.g., French: every noun is either masculine or feminine), some have as many as 16 (e.g., Swahili: there are different classes for animate objects, inanimate objects, tools, fruits...). Some languages with two noun classes differentiate between masculine and feminine (e.g., French), some between common and neuter (e.g., Dutch). Clearly, all these languages differ in terms of what might be called their ‘grammatical gender system’. While Western European languages might give the impression that grammatical gender (e.g., whether nouns are masculine or feminine) primarily affects the articles placed in front of nouns (e.g., de versus het in Dutch), these differences often affect the noun itself and other words connected to it as well. Polish, for example, doesn’t even have articles (as if the English word ‘the’ didn’t exist) but still uses an intricate gender system which requires adjectives to agree with nouns. The reasons for these differences between languages remain mysterious.

Given that the gender system of a language permeates all sentences, one might wonder whether it goes further and also influences how people think in general. On the face of it, this appears unlikely. A grammatical gender system is just a set of rules for how words change when combined. There is no ‘deeper’ meaning to them. Nonetheless, a series of experiments have come up with surprising results.

In the 1980s Alexander Guiora and colleagues noticed that two- to three-year-old Hebrew-speaking children (whose language system does differentiate between masculine and feminine nouns) are about half a year ahead in their gender identity development compared to English-speaking children. It is as if the gender distinction found in Hebrew nouns gave these children a hint about a similar gender distinction in the natural world.

Adults too seem to make use of grammatical gender even when it doesn’t make any sense to do so. Roberto Cubelli and colleagues asked people to judge whether two objects were of the same category (e.g., tools or furniture) or not. When the grammatical gender of the objects matched, people were faster in their judgements than when there was a mismatch. This task didn’t require people to name these objects, and yet still they appear to use the arbitrary grammatical classification system of their native language.

Edward Segel and Lera Boroditsky found the influence of grammatical gender even outside the laboratory - in an encyclopedia of classical paintings. They looked at all the gendered depictions of naturally asexual concepts like love, justice, and time. They noticed that these asexual entities (e.g., time) tended to be personified by masculine characters if the grammatical gender was masculine in the painter’s language (e.g., French: ‘le temps’), and vice versa for female characters (e.g., German: ‘die Zeit’). The depicted gender agreed with grammatical gender in 78% of the cases for painters whose mother tongue was ‘gendered’, like Italian, French and German. On top of that, this effect was consistent even when only looking at those concepts with different grammatical genders in the studied languages.

These and similar studies have powerfully shown how a grammatical classification system for nouns affects the view language speakers have on the world. By forcing people to think in certain categories, general thinking habits appear to be affected. This illustrates quite nicely that thought is influenced by what you must say – rather than by what you can say. The grammatical gender effect on cognition highlights the fact that language is not an isolated skill but instead a central part of how the mind works.

Written by Richard Kunert and Gwilym Lockwood

Further reading:

Segel, E., & Boroditsky, L. (2011). Grammar in art. Frontiers in Psychology, 1, 244. doi: 10.3389/fpsyg.2010.00244

- Is it unavoidable that regularly using a foreign language will influence our native language?

Most people who try to learn a second language, or who interact with non-native speakers, notice that the way non-native speakers speak their second language is influenced by their native language. They are likely to have a foreign accent, and they might use inappropriate words or an incorrect grammatical structure, because those words or that structure are used that way in their native language. A lesser-known yet common phenomenon is the influence of a foreign language we learn on our native language.

People who start using a foreign language regularly (for example, after moving to a different country) often find themselves struggling to recall words when using their native language. Other common influences are the borrowing of words or collocations (two or more words that often go together). For example, Dutch speakers of English might insert English words for which there is no literal translation, such as native, into a Dutch conversation. Or they may find themselves using a literal translation of the collocation ‘taking a picture’ while speaking in their native language, even if their native language does not use the verb take to express this action. Studies from the past couple of decades show that such an influence can appear at all linguistic levels: as described above, people may borrow words or expressions from their second language, but they might also borrow grammatical structures or develop a non-native accent in their own native language.

In general, research has shown that all the languages we speak are always co-activated. This means that when a Dutch person speaks German, not only his German but also his Dutch, as well as any other language that person speaks, are automatically activated at the same time. This co-activation likely promotes cross-linguistic influence.

So will learning a foreign language necessarily influence one's native language at all linguistic levels? To a degree, but there are large individual differences. The influence is larger the more dominant the use of the foreign language is, and in particular if it is regularly used with native speakers of that language (as when moving to a foreign country). The influence also increases with time, so immigrants, for example, are likely to show more influence after 20 years abroad than after 2, although there is also a burst of influence in the initial period of using a foreign language regularly. Some studies also suggest that differences among people in certain cognitive abilities, like the ability to suppress irrelevant information, affect the magnitude of the influence of the second language on the native language. It is important to note though that some of these influences are relatively minor, and might not even be detectable in ordinary communication.

By Shiri Lev-Ari and Hans Rutger Bosker

Further reading:

Cook, V. (Ed.). (2003). Effects of the second language on the first. Clevedon: Multilingual Matters.

- Why do some languages have a writing system that closely represents the way the language is actually spoken while other languages have a less clear writing system?

No language has a spelling system (or orthography) which absolutely and completely represents the sounds of that language, but some are definitely better than others. Italian has a shallow orthography, which means that the spelling of words represents the sounds of Italian quite well (although Sicilian, Sardinian, and Neapolitan Italian speakers may disagree), while English has a deep orthography, which means that spelling and pronunciation don't match so well.

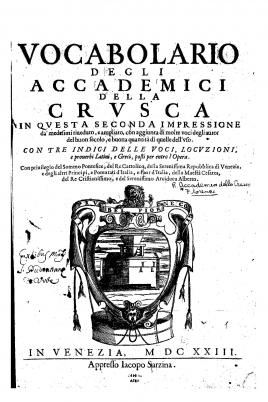

Italian is consistent for two main reasons. Firstly, the Accademia della Crusca was established in 1583 and has spent the centuries since then regulating the Italian language; the existence of such an academy has enabled wide-ranging and effective spelling consistency. Secondly, Standard Italian only has five vowels: a, i, u, e, and o, which makes it much easier to distinguish between them on paper. Other examples of languages with five-vowel systems are Spanish and Japanese, both of which also have shallow orthographies. Japanese is an interesting case; some words are written using the Japanese characters, which accurately represent the sound of the words, but other words are written with adapted Chinese characters, which represent the meaning of the words and don't represent the sound at all.

French has a deep orthography, but in one direction; while one sound can be written several different ways, there tends to be one specific way of pronouncing a particular vowel or combination of vowels. For example, the sound [o] can be written au, eau, or o, as in haut, oiseau, and mot; however, the spelling eau can only be pronounced as [o].

English, meanwhile, has a very deep orthography, and has happily resisted spelling reform for centuries (interestingly enough, this is not the case in the USA; Noah Webster's American Dictionary of the English Language introduced a successful modern spelling reform programme… or program). One obvious reason is the lack of a formal academy for the English language - English speakers are rather laissez-faire (certainly laissez-faire enough to use French to describe English speakers' attitudes towards English) - but there are several other reasons too.

Formed out of a melting pot of European languages - a dab of Latin and Greek here, a pinch of Celtic and French there, a fair old chunk of German, and a few handfuls of Norse - English has a long and complicated history. Some spelling irregularities in English reflect the original etymology of the words. The unpronounced b in doubt and debt harks back to their Latin roots, dubitare and debitum, while the pronunciation of ce- as "se-" in centre, certain, and celebrity is due to the influence of French (and send and sell are not "cend" and "cell" because they are Germanic in origin).

All languages change over time, but English had a particularly dramatic set of changes to the sound of its vowels in the Middle Ages, known as the Great Vowel Shift. The early and middle phases of the Great Vowel Shift coincided with the invention of the printing press, which helped to freeze the English spelling system at that point; then, the sounds changed but the spellings didn't, meaning that Modern English spells many words the way they were pronounced 500 years ago. This means that Shakespeare's plays were originally pronounced very differently from modern English, but the spelling is almost exactly the same. Moreover, the challenge of making the sounds of English match the spelling of English is harder because of the sheer number of vowels. Depending on their dialect, English speakers can have as many as 22 separate vowel sounds, but only the letters a, i, u, e, o, and y to represent them; it's no wonder that so many competing combinations of letters were created.

Deep orthography makes learning to read more difficult, as a native speaker and as a second language learner. Despite this, many people are resistant to spelling reform because the benefits may not make up for the loss of linguistic history. The English may love regularity when it comes to queuing and tea, but not when it comes to orthography.

By Gwilym Lockwood & Flora Vanlangendonck

See:

Original Pronunciation in Shakespeare: http://www.youtube.com/watch?v=gPlpphT7n9s

- How do people develop the different skills necessary for language acquisition, and in which order and why?

Children usually start babbling at the age of two or three months: first they babble vowels, later consonants and finally, between the ages of seven and eleven months, they produce word-like sounds. Children use babbling to explore how their speech apparatus works and how they can produce different sounds. Along with the production of word-like sounds comes the ability to extract words from speech input. These are important steps towards the infant’s first words, which are usually produced at around 12 months of age.

Simple one-word utterances are followed by two-word utterances during the second half of the child’s second year, in which one can already observe grammar. Children growing up learning a language like Dutch or German (subject-object-verb order in subordinate clauses, which are considered to have a stable order) or English (subject-verb-object order) produce their two-word sentences in a subject-verb order, such as “I eat”, while learners of languages such as Arabic or Irish (languages with a verb-subject-object order) produce sentences like “eat I”. From then on, there is a rapid acceleration in the infant’s vocabulary growth, as sentences also contain more words and get more complex. Grammar is said to have developed by the age of four or five years, and by then children are basically considered linguistic adults. The age at which children acquire these skills may vary strongly from one infant to another, and the order may also vary depending on the linguistic environment in which the children grow up. But by the age of four or five, all healthy children will have acquired language. The development of language correlates with different processes in the brain, such as the formation of connective pathways, the increase of metabolic activity in different areas of the brain and myelination (the production of myelin sheaths that form a layer around the axon of a neuron and are essential for proper functioning of the nervous system).

By Mariella Paul and Antje Meyer

Further reading:

Bates, E., Thal, D., Finlay, B. L., & Clancy, B. (1999). Early language development and its neural correlates. In I. Rapin & S. Segalowitz (Eds.), Handbook of Neuropsychology, Vol. 6, Child Neurology (2nd edition). Amsterdam: Elsevier.

- Homophones – what are they and why do they exist at all?

Homophones are words that sound the same but have two or more distinct meanings. This phenomenon occurs in all spoken languages. Take for instance the English words FLOWER and FLOUR. These words sound the same, even though they differ in several letters when written down (they are therefore called heterographic homophones). Other homophones sound the same and also look the same, such as the words BANK (bench/river bank) and BANK (financial institution) in both Dutch and English. Such words are sometimes called homographic homophones. Words with very similar sounds but different meanings also exist between languages. An example is the word WIE, meaning 'who' in Dutch, but 'how' in German.

One might think that homophones would create serious problems for the listener. How can one possibly know what a speaker means when she says a sentence like "I hate the mouse"? Indeed, many studies have shown that listeners are a little slower to understand ambiguous words than unambiguous ones. However, in most cases, it is evident from the context what the intended meaning is. The above sentence might for example appear in the contexts of "I don't mind most of my daughter's pets, but I hate the mouse" or "I love my new computer, but I hate the mouse". People normally figure out the intended meaning so quickly that they don't even perceive the alternative. Preceding linguistic context and common world knowledge thus help us in understanding a speaker’s intended message.

Why do homophones exist? It seems much less confusing to have separate sounds for separate concepts. Linguists take sound change as an important factor that can lead to the existence of homophones. For instance, in the early 18th century the first letter of the English word KNIGHT was no longer pronounced, making it a homophone with the word NIGHT. Language contact also creates homophones. The English word DATE was relatively recently adopted into Dutch, becoming a homophone with the already existing word DEED. Some changes over time thus create new homophones, whereas other changes undo the homophonic status of a word. Now that the Dutch verb form ZOUDT (would) is no longer commonly used, the similarly sounding noun ZOUT (salt) is losing its homophonic status.

Finally, a particularly nice characteristic of homophones is that they are often used in puns or as stylistic elements in literary texts. In Shakespeare’s Romeo and Juliet (Act I, Scene IV, lines 13-16), Romeo for instance uses a homophone when he refrains from following up his friend Mercutio’s advice to dance:

Mercutio: Nay, gentle Romeo, we must have you dance.

Romeo: Not I, believe me: you have dancing shoes / With nimble soles: I have a soul of lead / So stakes me to the ground I cannot move.

Among other things, it is such elegant use of homophones that has led to Shakespeare’s literary success.

By David Peeters and Antje S. Meyer

Further reading:

Bloomfield, L. (1933). Language. New York: Henry Holt and Company.

Cutler, A., & Van Donselaar, W. (2001). Voornaam is not (really) a homophone: Lexical prosody and lexical access in Dutch. Language and speech, 44(2), 171-195. (link)

Rodd, J., Gaskell, G., & Marslen-Wilson, W. (2002). Making sense of semantic ambiguity: Semantic competition in lexical access. Journal of Memory and Language, 46(2), 245-266. (link)

Tabossi, P. (1988). Accessing lexical ambiguity in different types of sentential contexts. Journal of Memory and Language, 27(3), 324-340. (link)

- How does dyslexia arise?

-

When a child has significant difficulties in learning to read and/or spell despite normal general intelligence and overall sensory abilities, then he or she may be diagnosed with developmental dyslexia. This condition was first described in the 1890s and referred to as 'congenital word blindness', because it was thought to result from problems with processing of visual symbols. Over the years it has become clear that visual deficits are not the core feature for most people with dyslexia. In many cases, it seems that subtle underlying difficulties with aspects of language could be contributing. To learn to read, a child needs to understand the way that words are made up of individual units (phonemes), and must become adept at matching those phonemes to arbitrary written symbols (graphemes). Although the overall language proficiency of people with dyslexia usually appears normal, they often perform poorly on tests that involve manipulations of phonemes and processing of phonology, even when this does not involve any reading or writing.

Since dyslexia is defined as a failure to read, without being explained by an obvious known cause, it is possible that this is not one single syndrome, but instead represents a cluster of different disorders, involving distinct mechanisms. However, it has proved hard to clearly separate dyslexia out into subtypes. Studies have uncovered quite a few convincing behavioural markers (not only phonological deficits) that tend to be associated with the reading problems, and there is a lot of debate about how these features fit together into a coherent account. To give just one example, many people with dyslexia are less accurate when asked to rapidly name a visually-presented series of objects or colours. Some researchers now believe that dyslexia results from the convergence of several different cognitive deficits, co-occurring in the same person.

It is well established that dyslexia clusters in families and that inherited factors must play a substantial role in susceptibility. Nevertheless, there is no doubt that the genetic basis is complex and heterogeneous, involving multiple different genes of small effect size, interacting with the environment. Genetic mapping efforts have already enabled researchers to pinpoint a number of interesting candidate genes, such as DYX1C1, KIAA0319, DCDC2, and ROBO1, and with dramatic advances in DNA sequencing technology there is much promise for discovering others in the coming years. The neurobiological mechanisms that go awry in dyslexia are largely unknown. A prominent theory posits disruptions of a process in early development, a process in which brain cells move towards their final locations, known as neuronal migration. Indirect supporting evidence for this hypothesis comes from studies of post-mortem brain material in humans and investigations of functions of some candidate genes in rats. But there are still many open questions that need to be answered before we can fully understand the causal mechanisms that lead to this elusive syndrome.

By Simon Fisher

Further reading:

Carrion-Castillo, A., Franke, B., & Fisher, S. E. (2013). Molecular genetics of dyslexia: an overview. Dyslexia, 19, 214–240. (link)

Demonet, J. F., Taylor, M. J., & Chaix, Y. (2004). Developmental dyslexia. Lancet, 363, 1451–1460. (link)

Fisher, S. E. & Francks, C. (2006). Genes, cognition and dyslexia: learning to read the genome. Trends in Cognitive Sciences, 10, 250-257. (link)

- Do signers from different language backgrounds understand each other’s signs?

-

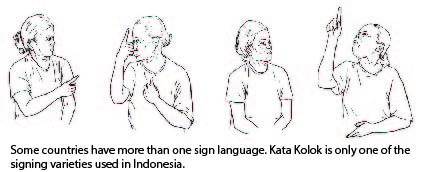

When we tell people we investigate the sign languages of deaf people, or when people see us signing, they often ask us whether sign language is universal. The answer is that nearly every country is home to at least one national sign language which does not follow the structure of the dominant spoken language used in that country. So British Sign Language and American Sign Language in fact look really different. Chinese Sign Language and Sign Language of the Netherlands, for example, also have distinct vocabularies, deploy different fingerspelling systems, and have their own set of grammatical rules. At the same time, a Chinese and a Dutch deaf person, who do not have any shared language, manage to bridge this language gap with relative ease when meeting for the first time.

This kind of ad hoc communication is also known as cross-signing. In collaboration with the International Institute for Sign Languages and Deaf Studies - iSLanDS, we are conducting a study of how cross-signing emerges among signers from different countries who meet for the first time. The recordings include signers from countries such as South Korea, Uzbekistan, and Indonesia. Our initial findings are that the communicative success in these dialogues is linked to the interlocutors’ ability to accommodate to their communicative partner by using creative signs that are not found in their own sign languages. This linguistic creativity often capitalizes on the depictive properties of visual signs (e.g. gesturing a circle in the air to talk about a round object, or representing a man by indicating a moustache), but also on general principles of interaction, e.g. repeating a sign to request more information.

Cross-signing is distinct from International Sign, which is used at international deaf meetings such as the World Federation of the Deaf (WFD) congress or the Deaflympics. International Sign is strongly influenced by signs from American Sign Language and is usually used to present in front of international deaf audiences who are familiar with its lexicon. Cross-signing, on the other hand, emerges in interaction among signers without knowledge of each other's native sign languages.

By Connie de Vos, Kang-Suk Byun & Elizabeth Manrique

Suggestion for further reading:

Information on differences and commonalities between different sign languages, and between spoken and signed languages by the World Federation of the Deaf: (link)

Mesch, J. (2010). Perspectives on the Concept and Definition of International Sign. World Federation of the Deaf. (link)

Supalla, T., & Webb, R. (1995). The grammar of International Sign: A new look at pidgin languages. In K. Emmorey and J. Reilly (Eds.), Language, Gesture, and Space (pp. 333-352). Mahwah, NJ: Lawrence Erlbaum.

- Is body language universal?

-

Our bodies constantly communicate in various ways. One form of bodily communication is what we traditionally consider as “body-language”. In the context of social interactions, our body expresses attitudes and emotions influenced by the dynamics of the interaction, interpersonal relations and personality (see also answer to the question "What is body language?"). These bodily messages are often considered to be transmitted unwittingly. Because of this, it would be difficult to teach a universal shorthand suitable for expressing the kind of things considered to be body language; however, at least within one culture, there seems to be a great deal of commonality in how individuals express attitudes and emotions through their body.

Another form of bodily communication is the use of co-speech gesture. Co-speech gestures are movements of the hands, arms, and occasionally other body parts that interlocutors produce while talking. Importantly, co-speech gestures express meaning that is closely tied to the meaning communicated by the speech that they accompany, thus contrasting crucially with the kind of signals that constitute ‘body language’. Because speech and gesture are so tightly intertwined, co-speech gestures are only very rarely fully interpretable in the absence of speech. As such, co-speech gestures do not help communication much if interlocutors do not speak the same language. Further, co-speech gestures are shaped by the culture in which they are embedded (and therefore differ between cultures, at least to some extent), and there is no standard of form that applies to these gestures even within one culture; they are each person’s idiosyncratic creation at the moment of speaking (although there is overlap in how different people use gesture to represent meaning, there are also substantial differences). As such, co-speech gestures are the opposite of a universal nonverbal ‘code’ that could be used when interlocutors do not share a spoken language.

What people often tend to resort to when trying to communicate without a shared language are pantomimic gestures, or pantomimes. These gestures are highly iconic in nature (like some iconic co-speech gestures are), meaning that they map onto structures in the world around us. Even when produced while speaking, these gestures are designed to be understandable in the absence of speech. Without a shared spoken language, they are therefore more informative than co-speech gestures. As long as we share knowledge about the world around us - for instance about actions, objects, and their spatial relations - these gestures tend to be communicative, even if we don’t share the same language.

An important distinction has to be made between these pantomimic gestures that can communicate information in the absence of speech and sign languages. In contrast to pantomimes, sign languages of deaf communities are fully-fledged languages consisting of conventionalised meanings of individual manual forms and movements which equate to the components that constitute spoken language. There is not one universal sign language: different communities have different sign languages (Dutch, German, British, French or Turkish sign languages being a small number of examples).

By Judith Holler & David Peeters

Further reading:

Kendon, A. (2004). Gesture: Visible action as utterance. Cambridge University Press. (link)

McNeill, D. (1992). Hand and mind: What gestures reveal about thought. University of Chicago Press. (link book review)

- Is there a language gene that other species do not have?

-

Language appears to be unique in the natural world, a defining feature of the human condition. Although other species have complex communication systems of their own, even our closest living primate relatives do not speak, in part because they lack sufficient voluntary control of their vocalizations. After years of intensive tuition, some chimpanzees and bonobos have been able to acquire a rudimentary sign language. But still the skills of these exceptional cases have not come close to those of a typical human toddler, who will spontaneously use the generative power of language to express thoughts and ideas about present, past and future.

It is certain that genes are important for explaining this enigma. But, there is actually no such thing as a "language gene" or "gene for language", as in a special gene with the designated job of providing us with the unique skills in question. Genes do not specify cognitive or behavioural outputs; they contain the information for building proteins which carry out functions inside cells of the body. Some of these proteins have significant effects on the properties of brain cells, for example by influencing how they divide, grow and make connections with other brain cells that in turn are responsible for how the brain operates, including producing and understanding language. So, it is feasible that evolutionary changes in certain genes had impacts on the wiring of human brain circuits, and thereby played roles in the emergence of spoken language. Crucially, this might have depended on alterations in multiple genes, not just a single magic bullet, and there is no reason to think that the genes themselves should have appeared "out of the blue" in our species.

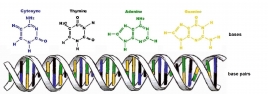

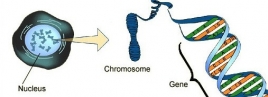

There is strong biological evidence that human linguistic capacities rely on modifications of genetic pathways that have a much deeper evolutionary history. A compelling argument comes from studies of FOXP2 (a gene that has often been misrepresented in the media as the mythical "language gene"). It is true that FOXP2 is relevant for language – its role in human language was originally discovered because rare mutations that disrupt it cause a severe speech and language disorder. But FOXP2 is not unique to humans. Quite the opposite, versions of this gene are found in remarkably similar forms in a great many vertebrate species (including primates, rodents, birds, reptiles and fish) and it seems to be active in corresponding parts of the brain in these different animals. For example, songbirds have their own version of FOXP2 which helps them learn to sing. In-depth studies of versions of the gene in multiple species indicate it plays roles in the ways that brain cells wire together. Intriguingly, while it has been around for many millions of years in evolutionary history, without changing very much, there have been at least two small but interesting alterations of FOXP2 that occurred on the branch that led to humans, after we split off from chimpanzees and bonobos. Scientists are now studying those changes to find out how they might have impacted the development of human brain circuits, as one piece of the jigsaw of our language origins.

By Simon Fisher, Katerina Kucera & Katherine Cronin

Further reading:

Revisiting Fox and the Origins of Language (link)

Fisher S.E. & Marcus G.F. (2006) The eloquent ape: genes, brains and the evolution of language. Nature Reviews Genetics, 7, 9-20. (link)

Fisher, S.E. & Ridley, M. (2013). Culture, genes, and the human revolution. Science, 340, 929-930. (link)

- Is there a quick method to build my English vocabulary?

-

Learning a new language is not easy, largely because of the heavy burden on memory. A universal “best practice” for everyone probably does not exist, but vocabulary memorization can become more efficient with the help of some good strategies.

The conventional method of building up a vocabulary from scratch is remembering the words in the target language by translating them into one’s own language. This might be advantageous if the new language and the person’s mother tongue are related, such as Dutch and German, but proves too indirect for unrelated languages, such as English and Chinese. The learning process becomes more efficient when the translation step is removed and the new words are directly linked to the actual objects and actions. Many highly skilled second language speakers frequently run into words whose exact translations do not even exist in their native language, demonstrating that those words were not learned by translation, but from context in the new language.

To skip the translation step early in the language learning process, it is helpful to visualize what it was like when these words were said in the learner’s native language and link the response to the new words. The idea is to mimic how a child learns a new language. Another way to build a vocabulary more quickly is by grouping things that are conceptually related and practicing them at the same time. For example, naming things and events related to transportation as one is getting home from work, or naming objects on the dinner table. The key is to make the new language make “direct sense” instead of trying to understand it through a familiar medium such as the native language. At a slightly more advanced stage of building a vocabulary, one can use a dictionary in the target language, such as a thesaurus in English, to find the meaning of new words, rather than a language-to-language dictionary.

Employing a method called “Spaced Learning” may also be beneficial. Spaced Learning is a timed routine, in which new material (such as a set of new words in a studied language) is introduced, reviewed, and practiced in three timed blocks with two 10-minute breaks. It is important that distractor activities that are completely unrelated to the studied material, such as physical exercises, are performed during those breaks. It has been demonstrated in laboratory experiments that such repeated stimuli, separated by timed breaks, can initiate long-term connections between neurons in the brain and result in long-term memory encoding. These processes occur in minutes, and have been observed not only in humans, but also in other species.

Forgetting is inevitable when we are learning new things, and so is making mistakes. The more you use the words that you are learning, the better you will remember them.

Written by Sylvia Chen & Katerina Kucera

Further reading:

Kelly P. & Whatson T. (2013). Making long-term memories in minutes: a spaced learning pattern from memory research in education. Frontiers in Human Neuroscience, 7, 589. (link)

- Do languages tend to become more similar over time or do they become more different?

-

A basic assumption of language change is that if two linguistic groups are isolated from each other, then their languages will become more different over time. If the groups come into contact again, then their languages may become more similar to each other, for instance by borrowing parts of each other’s languages. This is the case in many parts of the world where aspects of language have been borrowed into many different languages. A recent example is the word ‘internet’ which has been adopted by many languages. However, before global communication was possible, borrowing was restricted to languages in the same geographic area. This might cause languages in the same area to become more similar to each other. Linguists call these ‘areal effects’, and they are influenced by factors such as migration or a group being more powerful or prestigious.

Another possibility that would make languages more similar is if certain words or linguistic rules were easier to learn or somehow ‘fit’ the human brain better. This is similar to different biological species sharing similar traits, such as birds, bats and some dinosaurs evolving wings. In this case, even languages on opposite sides of the world might change to become more similar. Researchers have noticed some features in words across languages which seem to make a direct link between a word's sound and its meaning (this is known as sound-symbolism). For instance, words for ‘nose’ tend to involve sounds made with the nose such as [n]. Languages all over the world use a similar word to question what someone else has said – ‘huh?’ or ‘what?’ – possibly because it’s a short, question-like word that’s effective at getting people’s attention.

Telling the difference between these effects and the similarities caused by borrowing is difficult because their effects can be similar. One of the goals of evolutionary linguistics is to find ways of teasing these effects apart.

This question strikes at the heart of linguistic research, because it involves asking whether there are limits on what languages can be like. Some of the first modern linguistic theories suggested that there were limits on how different languages could be because of biological constraints. More recently, field linguists have been documenting many languages that show a huge diversity in their sounds, words and rules. It may be the case that for every way in which languages become more similar, there is another way in which they become more different at the same time.

By Seán Roberts & Gwilym Lockwood

Some links:

Can you tell the difference between languages? (link)

Why is studying linguistic diversity difficult? (link)

Is ‘huh?’ a universal word? (link)

Further reading:

Nettle, D. (1999). Linguistic Diversity. Oxford: Oxford University Press.

Dingemanse, M., Torreira, F., & Enfield, N. J. (in press). Is “Huh?” a universal word? Conversational infrastructure and the convergent evolution of linguistic items. PLoS One. (link)

Dunn, M., Greenhill, S. J., Levinson, S. C., & Gray, R. D. (2011). Evolved structure of language shows lineage-specific trends in word-order universals. Nature, 473, 79-82. (link)

- What do researchers mean when they talk about "maternal language"?

-

Image: Eoin Dubsky

When researchers talk about "maternal language", they talk about child-directed language. Modern research on the way in which caregivers talk to children started in the late seventies. Scholars who study language acquisition were interested in understanding how language learning is influenced by the way caregivers talk to their children. Since the main caregivers were usually mothers, most first studies focused on maternal language (often called Motherese), which was usually described as having higher tones, a wider tonal range and a simpler vocabulary. However, we now understand that the ways in which mothers modify their speech for their children are extremely variable. For example, some mothers use a wider tone range than usual, but many mothers use nearly the same tone range they use with adults. Mothers’ speech also changes to adapt to their children’s language abilities, so maternal speech can be very different from one month to the next.

Also, in some cultures mothers use little or no “baby talk” when interacting with their children. Because of this, it is impossible to define a universal maternal language. It’s also important to remember that fathers, grandparents, nannies, older siblings, cousins, and even non-family members often modify their speech when they address a young child. For this reason, researchers today prefer to use the label “child-directed speech” instead of "maternal language" or “motherese”. Some recent studies also show that we do more than just modify our speech for children: we also modify our hand gestures and demonstrative actions, usually making them slower, bigger, and closer to the child. In sum, child-directed language seems to be only one aspect of a broader communicative phenomenon between caregivers and children.

By Emanuela Campisi, Marisa Casillas & Elma Hilbrink

Further reading:

Fernald, A., Taeschner, T., Dunn, J., Papousek, M., de Boysson-Bardies, B., & Fukui, I. (1989). A cross-language study of prosodic modifications in mothers' and fathers' speech to preverbal infants. Journal of Child Language, 16, 477–501.

Rowe, M. L. (2008). Child-directed speech: relation to socioeconomic status, knowledge of child development and child vocabulary skill. Journal of Child Language, 35, 185–205.

- As devices get closer to imitating human brain activity, will we get to the point that implants (brain or otherwise) will enable voiceless communication?

-

Image: Ars Electronica

Our current understanding of the human brain holds that it is an information processor, comparable to a computer, that enables us to cope with an extremely challenging environment. As such, it would be theoretically possible to extract the information the brain contains, through what is known as a ‘brain-computer interface’ (BCI): by measuring neural activity and applying sophisticated, self-learning computer algorithms, researchers are now able to extract and reproduce, for instance, image data that is being processed by the visual cortex. Such methods allow researchers to take a snapshot of what someone is actually seeing through his or her own eyes.

With regard to language, recent studies have shown promising results as well. Brain imaging data was used to establish what kind of stimuli (spoken, written, photographs, natural sounds) people were observing in a task where they had to discriminate between animals and tools; researchers were able to determine the kind of stimulus by merely looking at a person’s brain activity. More invasive recordings have also demonstrated that we can extract information about speech sounds people are hearing and producing. This body of research suggests that it may be possible in the not-too-distant future to develop a neural prosthetic that would allow people to generate speech in a computer by just thinking about speaking.

When discussing voiceless communication, however, the application of BCI is still particularly challenging for a few reasons. First, human communication is substantially more than simply speaking and listening, and there are a whole host of other signals that we use to communicate (e.g., gestures, facial expression and other forms of non-verbal communication). Secondly, while BCIs to date have focused on extracting information from the brain (e.g., to-be-articulated syllables), inserting information into the brain would require more direct manipulation of brain activity. Cochlear implants represent one approach where we have been able to directly present incoming auditory information to auditory nerve cells in the ear in order to help congenitally deaf individuals hear. Such stimulation, however, still does not amount to directly stimulating the brain in the full richness needed for everyday communication. So, while both ‘reading’ and ‘writing’ language data from and to the brain with the aim to enable voiceless communication is theoretically possible, both are extremely challenging and would require tremendous advances in fields such as linguistics, neuroscience, computer science and statistics.

By Dan Acheson & Rick Janssen

Further reading:

[1] Schoenmakers, S., Barth, M., Heskes, T., & van Gerven, M. A. J. (2013). Linear Reconstruction of Perceived Images from Human Brain Activity. NeuroImage, 83, 951-961. (link)

[2] Simanova, I., Hagoort, P., Oostenveld, R., van Gerven, M.A.J. (2012). Modality-Independent Decoding of Semantic Information from the Human Brain. Cerebral Cortex, doi:10.1093/cercor/bhs324. (link)

[3] Chang, E. F., Niziolek, C. A., Knight, R. T., Nagarajan, S. S., & Houde, J. F. (2013). Human cortical sensorimotor network underlying feedback control of vocal pitch. Proceedings of the National Academy of Sciences, 110, 2653-2658. (link)

[4] Chang, E. F., Rieger, J. W., Johnson, K., Berger, M. S., Barbaro, N. M., & Knight, R. T. (2010). Categorical speech representation in human superior temporal gyrus. Nature Neuroscience, 13, 1428-1432. (link)

[5] Bouchard, K. E., Mesgarani, N., Johnson, K., & Chang, E. F. (2013). Functional organization of human sensorimotor cortex for speech articulation. Nature, 495, 327-332. (link)

- What is the difference between surface and deep layer in language?

-

Image: Duncan Rawlinson

The terms "surface layer" and "deep layer" refer to different levels that information goes through in the language production system. For example, imagine that you see a dog chasing a mailman. When you encode this information, you create a representation that includes three pieces of information: a dog, a mailman, and the action chasing. This information exists in the mind of the speaker as a "deep" structure. If you want to express this information linguistically, you can, for example, produce a sentence like "The dog is chasing the mailman." This is the "surface" layer: it consists of the words and sounds produced by a speaker (or writer) and perceived by a listener (or reader). You can also produce a sentence like "The mailman is being chased by a dog" to describe the same event -- here, the order in which you mention the two characters (the "surface" layer) is different from the first sentence, but both sentences are derived from the same "deep" representation. Linguists propose that you can perform movement operations to transform the information encoded in the "deep" layer into the "surface" layer, and refer to these movement operations as linguistic rules. Linguistic rules are part of the grammar of a language and must be learned by speakers in order to produce grammatically correct sentences.

Rules exist for different types of utterances. Other examples of rules, or movement operations between "deep" and "surface" layers, relate declarative sentences (You have a dog) to their corresponding interrogative sentences (Do you have a dog?). Here, the operations include placing an auxiliary verb (in this case "do") in front of the subject.

By Gwilym Lockwood & Agnieszka Konopka

Further reading:

Chomsky, N. (1957). Syntactic Structures. Mouton.

Chomsky, N. (1965). Aspects of the Theory of Syntax. MIT Press.

- Why do we cry out 'ouch' when we're in pain?

-

There are actually two questions hidden in this one. For a clear answer we can best treat them separately:

(1) Why do we cry when we are in sudden pain?

(2) Why do we say ‘ouch’ and not something else?

With regard to the first question, let’s start by noting that we’re not alone: a lot of other animals also cry when in pain. Why? Darwin, who wrote a book in 1872 about emotions in humans and animals, thought it had to do with the fact that most animals experience strong muscle contractions when they’re in pain, a ritualized version of quickly escaping a painful stimulus. Still, why should this be accompanied by an actual cry? Research has since shown that pain cries also have communicative functions in the animal kingdom: for example to alert others that there is danger, to call for help, or to prompt caring behavior. That last function already starts in the first seconds of our life, when we cry and our mothers caringly take us in their arms. Human babies, and in fact the young of many mammals, are born with a small repertoire of instinctive cries. The pain cry in this repertoire is clearly recognizable: it has a sudden start, a high intensity and a relatively short duration. Here we already see the contours of our ‘ouch’. Which brings us to the second part of our question.

Why do we say ‘ouch’ and not something else? Let us first take a more critical look at the question. Do we never shout anything different? Do we utter a neat ‘ouch’ when we hit our thumb with a hammer or could it also be ‘aaaah!’? In reality there is a lot of variation. Still, the variation is not endless: no one shouts out ‘bibibibi’ or ‘vuuuuu’ when in pain. Put differently, pain cries are variations on a theme: one that starts with an ‘aa’ sound because of the shape of our vocal tract when our mouth is wide open, and then sounds like ‘ow’ or ‘ouch’ when our mouth quickly returns to being closed. The word ‘ouch’ is a perfectly fine summary of this theme, and here we touch on an important function of language. Language helps us share experiences which are never exactly the same and yet can be categorised as similar. That is useful, because if we want to talk about “someone crying ouch” we don’t always need or want to imitate the cry. In that sense ‘ouch’ is more than just a pain cry: it is a proper word.

Does all this imply that ‘ouch’ may be the same in every language? Almost, but not quite, since each language will use its own inventory of sounds to describe a cry of pain. In German and Dutch it is ‘au’, in Hebrew it is ‘oi’, and in Spanish it is ‘ay’. At least, that is how Byington described it in 1942 in one of the first comparisons of these words.

Each of us is born with a repertoire of instinctive cries and then learns a language in addition to it. This enables us to do more than scream and cry: we can also talk about it. Which is a good thing, for otherwise this answer could not have been written.

Originally written in Dutch by Mark Dingemanse and published in "Kennislink Vragenboek".

Translated by Katrien Segaert and Judith Holler

- Why do we need orthography?

-

Image: JNo

Imagine if someone told you to write a message to a friend using only numbers and punctuation symbols. Perhaps you could decide on a way to use these signs to express the sounds of your language. But if you wrote a message using your new ‘alphabet’, how would your friend be able to decode it? The two of you would need to have already agreed on a shared way to use numbers and punctuation signs to write words. That is, you would need to have designed a shared orthography.

Like a key to a code, an orthography is a standard way of linking the symbols of an alphabet (or other script) to the sounds of a language. Having an orthography means that speakers of the same language can communicate with each other using writing. The shapes of the symbols that we choose don’t matter - what is important is that we understand how those symbols (graphemes) represent the sounds (phonemes) that we use in our spoken language.
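For readers who like to see this spelled out, the number-and-punctuation thought experiment can be sketched in a few lines of Python. This is only an illustration: the table below and the names `ORTHOGRAPHY`, `READING_KEY`, `write_message` and `read_message` are invented for this example, and the choice of symbols is arbitrary. Real orthographies are rarely this tidy, since one letter can stand for several sounds and the other way round.

```python
# Hypothetical shared orthography: each sound is assigned one number or
# punctuation symbol. The symbol choices are arbitrary; what matters is
# that writer and reader use the same table.
ORTHOGRAPHY = {
    "h": "4",
    "e": "3",
    "l": "1",
    "o": "0",
    "w": "!",
    "r": "?",
    "d": ";",
}

# The reader needs the same table, read in the opposite direction.
READING_KEY = {symbol: sound for sound, symbol in ORTHOGRAPHY.items()}


def write_message(sounds):
    """Turn a sequence of sounds into a string of agreed symbols."""
    return "".join(ORTHOGRAPHY[sound] for sound in sounds)


def read_message(symbols):
    """Turn a string of agreed symbols back into the sequence of sounds."""
    return [READING_KEY[symbol] for symbol in symbols]


message = write_message(["h", "e", "l", "o"])
print(message)                # 4310
print(read_message(message))  # ['h', 'e', 'l', 'o']
```

A friend who does not have the same table sees only `4310`; with the table, the message can be read back sound by sound.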

Even languages that use the same script have different sounds, and thus different orthographies. The Dutch and English orthographies use symbols of the Roman alphabet, and share many sound-grapheme correspondences - for example, the letter ‘l’ and the sound ‘l’ in the English word light and the Dutch word licht are pretty much the same. However, the letter ‘g’ stands for a different sound in the English orthography (e.g., in good) than it does in the Dutch orthography (goed). Readers of Dutch or English need to know this in order to link the written word forms to the spoken forms.

There are different ideas about what makes a ‘good’ orthography. General principles are that it should consistently represent all and only the distinctive sound contrasts in the language, with the fewest possible symbols and conventions. However, few established orthographies stick to this ideal - you only have to look at English spelling to realise that! Compare, for instance, the pronunciations of pint and print. Quirks and inconsistencies in orthographies can also have their own advantages, such as preserving historical information, highlighting cultural affiliations, and supporting dialect variation.

Written by Lila San Roque & Antje Meyer

More information:

Online encyclopedia of writing systems and languages (link)

The Endangered Alphabets Project (link)

ScriptSource: Writing systems, computers and people (link)

- Why is English the universal language?

-

Image: Jurriaan Persyn

Unlike most of the other answers here, language itself doesn't really come into it; English is perceived by many people as the universal language because of the former influence of the British Empire and the current influence of American political and economic hegemony.

It is possible to try giving a strictly linguistic explanation; it could be that English is a simple language which is relatively easy to pick up. English has no noun genders, no complicated morphology and no tone system; it is written in the Roman alphabet, which is pretty good at accurately mapping sounds to symbols; and the prevalence of English-language films, TV, and music makes it readily accessible and easy to practise. However, English also has an extensive vocabulary, a highly inconsistent spelling system, many irregular verbs, some problematic sounds such as "th", and a large inventory of vowels which can make it difficult for non-native speakers to tell words apart. Arguments about which languages are easy or difficult to learn are ultimately circular, as the perception of what is easy and what is difficult to learn depends on the person doing the learning.

The second explanation is historical. The UK was the first industrialised nation, and discovered that one of the advantages to this was that they could colonise the rest of the developing world far faster than other European countries could. The British Empire covered a quarter of the globe at its largest, including North America, the Caribbean, Australia, New Zealand, much of West and Southern Africa, South Asia, and parts of South-East Asia. The UK set up English-speaking systems of government, industry, and exploitation in these areas, which established English as the language of global power in the industrial era. The British Empire finally fell apart after the Second World War, but the 20th century saw the transfer of power from one English-speaking expansionist power to another. The USA's cultural, economic, political, and military domination of the 20th and 21st centuries has ensured that English remains the most important and influential global language. As the official language of business, science, diplomacy, communications, and IT (not to mention the main language of the most popular websites), this is unlikely to change any time soon.

Written by Gwilym Lockwood & Katrin Bangel

- What was language like in the very beginning?

-

Image: Alexandre Duret-Lutz

Nobody knows! This is one of the biggest problems in the field of language evolution. Unlike stone tools or skeletons, the language that people use doesn't fossilise, so we can’t study it directly. We have examples of writing from over 5,000 years ago which can help us work out what languages were like relatively recently, but recent research suggests that people have been using languages very much like our own for maybe 500,000 years.

In order to get an idea of how languages might have evolved, researchers use model systems. These might include computational models, experiments with real people or the study of languages that have developed very recently such as sign languages or creoles. We can’t know for sure what the first languages would have sounded like, but we can work out how communication systems tend to evolve and develop.

For example, one series of experiments studied how communication systems develop by using a game like Pictionary: players had to communicate about meanings by drawing. The researchers found that negotiation and feedback during communication were very important for a successful communication system to develop.

Another experiment used a chain of learners who had to learn an artificial language. The first learner had to learn a language with no rules, then teach the next person in the chain whatever they could remember. This second person tried to learn the language and then passed it on to the third person in the chain, just like a game of ‘broken telephone’. In this way, we can simulate the process of passing a language down through the generations, but now it only takes one afternoon rather than thousands of years. What researchers noticed is that the language changed from having a different word for every possible meaning to having smaller words that referred to parts of the meaning that could be put together. That is, a language with rules emerged.
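The idea of such a chain can be illustrated with a toy simulation in Python. This is a rough sketch for illustration only, not the procedure used in the actual experiments: the meanings (shapes and colours), the syllables, the function names and above all the rule by which a learner guesses words for meanings they were never shown are all assumptions made up for this example.

```python
import random

SHAPES = ["circle", "square", "star"]
COLOURS = ["red", "blue", "green"]
MEANINGS = [(shape, colour) for shape in SHAPES for colour in COLOURS]
SYLLABLES = ["ka", "mo", "ti", "lu", "ne", "po"]  # every syllable has 2 letters


def random_word(rng):
    """Invent a two-syllable word that has no relation to its meaning."""
    return rng.choice(SYLLABLES) + rng.choice(SYLLABLES)


def learn(examples, rng):
    """Build a full language from the (meaning, word) pairs a learner saw.

    Seen meanings are memorised. For an unseen meaning, the learner reuses
    the first syllable of a word seen with the same shape and the second
    syllable of a word seen with the same colour, and guesses otherwise.
    This generalisation rule is an assumption of this sketch.
    """
    language = dict(examples)
    for shape, colour in MEANINGS:
        if (shape, colour) in language:
            continue
        same_shape = [w for (s, _), w in examples.items() if s == shape]
        same_colour = [w for (_, c), w in examples.items() if c == colour]
        first = rng.choice(same_shape)[:2] if same_shape else rng.choice(SYLLABLES)
        second = rng.choice(same_colour)[2:] if same_colour else rng.choice(SYLLABLES)
        language[(shape, colour)] = first + second
    return language


def run_chain(generations, seen_per_generation, seed=0):
    """Pass a language down a chain of learners; return every generation."""
    rng = random.Random(seed)
    # The first language has no rules: one unrelated word per meaning.
    language = {meaning: random_word(rng) for meaning in MEANINGS}
    history = [language]
    for _ in range(generations):
        # Each learner only sees part of the language before passing it on.
        seen = rng.sample(MEANINGS, seen_per_generation)
        examples = {meaning: language[meaning] for meaning in seen}
        language = learn(examples, rng)
        history.append(language)
    return history


history = run_chain(generations=10, seen_per_generation=5)
for meaning, word in sorted(history[-1].items()):
    print(meaning, word)
```

Comparing `history[0]` with `history[-1]` shows how the words have changed along the chain. In this toy version, words for the same shape or the same colour tend to start sharing syllables, because each learner has to fill in the gaps by reusing parts of the words they did see. How quickly that happens depends on the random seed and on how much each learner gets to see.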

So, early languages may have had a different word for every meaning and gradually broken them apart to create rules. However, other researchers think that languages started with small words for every meaning and gradually learned to stick them together. Some think that language evolved very suddenly, while others think it evolved slowly. Some think that parts of language evolved in many different places at the same time, and contact between groups brought the ideas together. Some think that early languages would have sounded more like music. There are many suggestions that the first languages were signed languages, and that spoken language developed later.

Researchers in this field have to find creative ways of studying the problem and integrate knowledge from many fields such as linguistics, psychology, biology, neuroscience and anthropology.

Written by Seán Roberts & Harald Hammarström

Experiments on language evolution:

Communicating with slide whistles (link)

The iterated learning experiments (link)

Blog posts on language evolution experiments (link1, link2)

Further reading:

Galantucci, B. (2005). An Experimental Study of the Emergence of Human Communication Systems. Cognitive Science, 29, 737-767. (link)

Hurford, J. (forthcoming). Origins of language: A slim guide. Oxford University Press.

Johansson, S. (2005). Origins of Language: Constraints on hypotheses. Amsterdam: John Benjamins.

Kirby, S., Cornish, H. & Smith, K. (2008). Cumulative cultural evolution in the laboratory: An experimental approach to the origins of structure in human language. Proceedings of the National Academy of Sciences of the United States of America, 105, 10681–10686. (link)

- How does manipulating through language work?

-

Image: Doctor-Major

There are many ways to manipulate someone else: focusing on positive words while hiding negative information (‘95% fat-free’ rather than ‘5% fat’), giving neutral information an emotional twist through language melody, hiding important information in sentence positions which people usually don’t pay much attention to, etc. Language offers many instruments for manipulation. In this answer I will focus on how metaphors can be used in this way.

One way to manipulate someone else is by talking about one topic openly while raising another topic below the radar. The effect of this strategy is that the listener’s judgment is steered in the desired direction. Stanford psychologists Paul Thibodeau and Lera Boroditsky have investigated how this can be done. They presented participants with crime statistics which were embedded in a text which treated crime either as a beast (‘preying on a town’, ‘lurking in the neighbourhood’) or instead as a virus (‘infecting a town’, ‘plaguing the neighbourhood’). When participants were asked what to do about the crime problem, those who were exposed to the idea of crime as a beast were more likely to suggest law enforcement actions such as to capture criminals, enforce the law or punish wrongdoers. In contrast, participants who were presented with crime as a virus often opted for reform measures such as to diagnose, treat or inoculate crime. Remarkably, embedding the same information in different contexts had a bigger effect than the usual factors associated with opinions on how to address crime. A conservative political affiliation or male gender biases people towards the enforcement side, but not nearly as strongly as slipping in a beast metaphor.

Why don’t people realize that metaphors should not be taken literally? Crime was never meant as an actual animal or an actual virus, of course. And people presumably understood that. However, when people process a metaphor, the primary meaning of its words gets activated first, regardless of whether the context demands it or not. This can be shown with brain research. A team of Cambridge neuroscientists led by Veronique Boulenger presented participants with sentences such as ‘He grasped the idea’ and compared the activation pattern to sentences such as ‘He grasped the object.’ It turns out that hand-related brain areas were activated in both kinds of sentences even though grasping an idea has nothing to do with actual grasping.

Manipulating through language can thus exploit the fact that language users cannot help but activate the primary meaning of words. This is why metaphors work so well as a tool for manipulation, one that can even be used to influence something as politically sensitive as how to fight crime.

Written by Richard Kunert & Diana Dimitrova

Further reading:

Boulenger, V., Hauk, O., & Pulvermüller, F. (2009). Grasping Ideas with the Motor System: Semantic Somatotopy in Idiom Comprehension. Cerebral Cortex, 19, 1905-1914. (link)

Thibodeau, P.H., & Boroditsky, L. (2011). Metaphors We Think With: The Role of Metaphor in Reasoning. PLoS ONE, 6, e16782. (link)

- What is the connection between movement and language?

-

Speaking requires planning and executing rapid sequences of movements. Several muscle systems are involved in the production of speech sounds. Not only the tongue, lips and jaw, but also the larynx and respiratory muscles work in close coordination when we speak. As for any other movement, motor planning and sensorimotor control are essential for speaking.

In children, a tight relation between fine motor skills and language proficiency has been demonstrated. That is why speech therapists encourage sensory-rich activities like finger painting, water or sand play, and manipulations involving small objects (coloring, buttoning, etc.) in children with speech delays. Such activities help to form new neural connections that are necessary for planning movement sequences and controlling fine-grained movements. For the same reason, hand exercises can be beneficial as part of a complex therapy for speech and language recovery after a stroke or brain damage, in cases where language problems are caused by impaired articulation or motor control.